Lets check out various different options to build up table with unique Keys along with Performance Comparison.

Preface

One of the reader, Wouter has requested a code snippet on Easily Build a internal table with Unique keys based on various table entries. I thought of just writing it as a code snippet but later on thought of adding the performance aspect of the different techniques.

As we have checked in one of the old article Performance of Using Keys in SELECT with FOR ALL ENTRIES, it important to build an internal table when trying to get entries using FOR ALL ENTRIES. It would be beneficial when you are looking for added advantage.

Various Techniques

Lets check out various techniques which we would compare in the below mention code:

- SORTED table with Unique Keys – In this approach, you declare the ITAB as SORTED with Unique keys for which you want to build the unique key table. All the fields in the table would need to be key fields as part of the Unique keys

- COLLECT – Use Collect to determine unique key fields. Make sure all the fields in the question are non-numeric fields. COLLECT aggregates the field value for non-numeric fields to derive at the total.

- DELETE Adjacent Duplicates – In this approach you append all the lines into the ITAB, SORT and delete the duplicates.

Code Lines

Code lines to compare the performance for these options:

*&---------------------------------------------------------------------* *& Purpose - Performance Comparison for building a unique key table *& Author - Naimesh Patel *& URL - http://zevolving.com/?p=2139 *&---------------------------------------------------------------------* REPORT ZTEST_NP_UNIQUE_KEYS_PERF. * DATA: t_t100 TYPE STANDARD TABLE OF t100. DATA: ls_t100 LIKE LINE OF t_t100. * DATA: lv_sta_time TYPE timestampl, lv_end_time TYPE timestampl, lv_diff TYPE p DECIMALS 5. * SELECT * FROM t100 INTO TABLE t_t100 UP TO 10000 ROWS WHERE arbgb IN ('00','01','B0','B1','B2'). WRITE: / 'Total number of records', sy-dbcnt. *---- * Unique using SORTED table *---- TYPES: BEGIN OF lty_langu, langu TYPE sy-langu, END OF lty_langu. DATA: lt_langu_sorted TYPE SORTED TABLE OF lty_langu WITH UNIQUE KEY langu. DATA: ls_langu LIKE LINE OF lt_langu_sorted. * GET TIME STAMP FIELD lv_sta_time. LOOP AT t_t100 INTO ls_t100. READ TABLE lt_langu_sorted INTO ls_langu WITH KEY langu = ls_t100-sprsl. IF sy-subrc NE 0. ls_langu-langu = ls_t100-sprsl. INSERT ls_langu INTO lt_langu_sorted INDEX sy-tabix. ENDIF. ENDLOOP. GET TIME STAMP FIELD lv_end_time. lv_diff = lv_end_time - lv_sta_time. WRITE: /(40) 'sorted table unique keys', lv_diff. * *------ * Collect entries *------ DATA: lt_langu TYPE STANDARD TABLE OF lty_langu. DATA: ls_langu_1 LIKE LINE OF lt_langu. * GET TIME STAMP FIELD lv_sta_time. LOOP AT t_t100 INTO ls_t100. ls_langu_1-langu = ls_t100-sprsl. COLLECT ls_langu_1 INTO lt_langu. ENDLOOP. GET TIME STAMP FIELD lv_end_time. lv_diff = lv_end_time - lv_sta_time. WRITE: /(40) 'Collect', lv_diff. * *------ * Delete Adjacent Duplicate *------ CLEAR lt_langu. GET TIME STAMP FIELD lv_sta_time. LOOP AT t_t100 INTO ls_t100. ls_langu_1-langu = ls_t100-sprsl. APPEND ls_langu_1 TO lt_langu. ENDLOOP. SORT lt_langu BY langu. DELETE ADJACENT DUPLICATES FROM lt_langu. GET TIME STAMP FIELD lv_end_time. lv_diff = lv_end_time - lv_sta_time. WRITE: /(40) 'SORT and Delete Adjacent Duplicate', lv_diff.

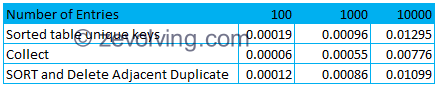

Performance Results

Lets check out the performance impact:

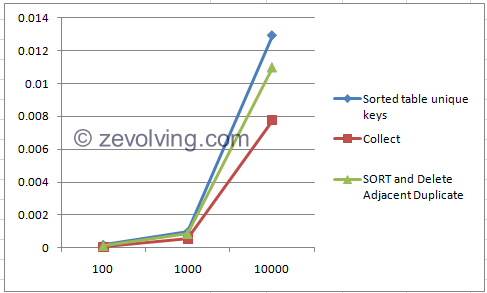

For different number of records in the original table, you would see similar number of the time spent building the unique key table. Clearly the COLLECT is winner here.

On a Graph …

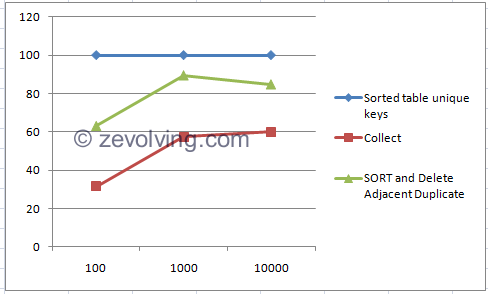

When using the SORTED unique key as 100% …

Conclusion

Using COLLECT is much more faster than using the SORTED Unique Keys or DELETE ADJACENT. Both of the later options has to execute the SORT statements, and system would have to account for that as well.

Do you have any other technique or want to share the performance results?

I seem to remember reading collect uses a hashed key. I use collect whenever possible, and have been re-writing bits where it makes more sense to use a collect.

Great blog Naimesh!.

Great post Naimesh, thx!

Hello Steve,

Yes, you are correct. COLLECT uses temporary Hash administration to append entries when table is Standard Table. ABAP Keyword Collect

Thanks,

Naimesh Patel

Thank you Naimesh!

Once again it is proven that ‘old’ statements like COLLECT are really powerful. I think I read somewhere that collect uses a hashed-table-approach internally.

I have no ABAP system available here and now but I would simplify your sorted table approach using a hashed table and then compare times again:

And, let me mention: For sorted and hashed tables you do not need to specify INDEX. The runtime system will determine the position faster than a READ TABLE statement. If the INSERT .. INTO TABLE is a duplicate key, it will simply return a SY-SUBRC = 4. (refer http://help.sap.com/abapdocu_731/en/index.htm)

Regards

Clemens

Hello Clemens,

You are correct that COLLECT uses temporary Hash algorithm. Since its temporary, any change to the entries would destroy the Hash management and system would treat it with its standard access.

I corrected your code to remove FS as my syntax highlighter is not yet capable of handling the FS in comments. I ran the code with my other results and it your code provided the similar results like COLLECT with 5% +-.

I completely forgot that we don’t need to check on for the entry as INSERT would be able to handle it anyways. Thanks for pointing it out.

Thanks,

Naimesh Patel